P595 Feasibility and performance of a fully automated endoscopic disease severity grading tool for ulcerative colitis using unaltered multisite videos

R. Stidham1,2, H. Yao3, S. Bishu1, M. Rice1, J. Gryak3, H.J. Wilkins4, K. Najarian2,3,5

1University of Michigan, Department of Medicine - Division of Gastroenterology and Hepatology, Ann Arbor, USA, 2University of Michigan, Michigan Integrated Center for Health Analytics and Medical Prediction (MiCHAMP), Ann Arbor, USA, 3University of Michigan, Department of Computational Medicine and Bioinformatics, Ann Arbor, USA, 4Lycera Corporation, Clinical Development, Plymouth Meeting, USA, 5University of Michigan, Department of Electrical Engineering and Computer Science, Ann Arbor, USA

Background

Endoscopic assessment is a core component of grading disease severity in ulcerative colitis (UC), but its subjectivity threatens accuracy and reproducibility. We aimed to develop and test a fully automated video analysis system for grading endoscopic disease severity in UC.

Methods

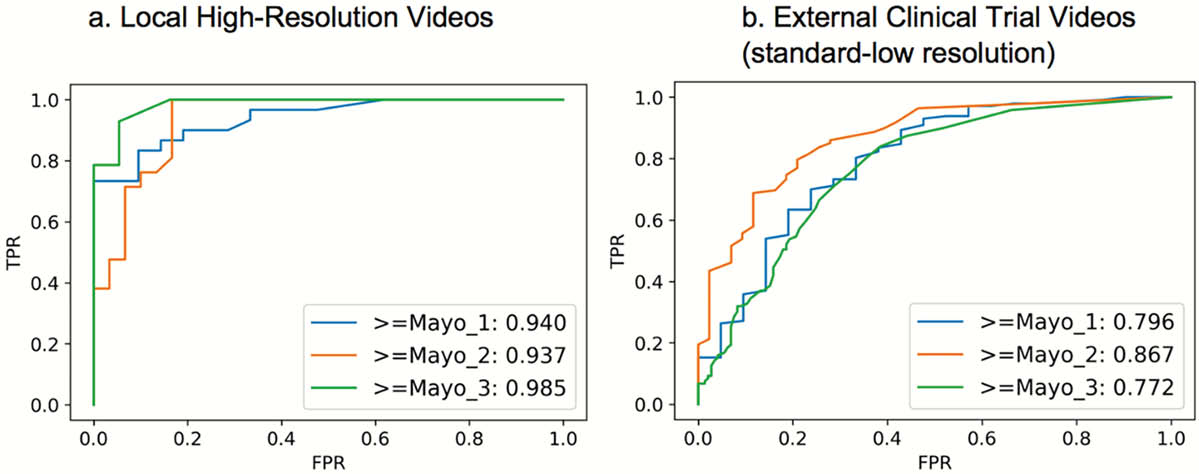

A developmental dataset of local high-resolution UC colonoscopy videos was generated, with Mayo endoscopic scores (MES) assigned by experienced local reviewers. Videos were converted into stacks of still images, which were annotated both for image quality sufficient for scoring (informativeness) and for MES grade (Mayo 0, 1, 2, or 3). Convolutional neural networks (CNNs) were trained with 5-fold cross-validation to predict still-image informativeness and disease severity grade. Whole-video MES models were developed with a template-matching grid search that matched reviewer MES scores to the proportions of predicted still-image scores within each video. The automated whole-video MES workflow was then tested on a separate set of endoscopic videos from an international multicenter UC clinical trial (LYC-30937-EC). Agreement was assessed with Cohen’s kappa coefficient using quadratic weighting.
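The abstract does not specify how the template-matching grid search turns still-image predictions into a whole-video MES. The Python sketch below shows one possible reading, for illustration only: each video is summarized by the fraction of informative frames predicted at each Mayo grade, a hypothetical rule assigns the highest grade whose cumulative frame share meets a threshold, and a grid search picks the thresholds that maximize quadratically weighted Cohen's kappa against reviewer scores. The function names, the threshold rule, the grid, and the toy data are all assumptions, not the authors' implementation.

```python
from itertools import product

GRADES = 4  # Mayo endoscopic score 0-3


def quadratic_weighted_kappa(rater_a, rater_b, n_classes=GRADES):
    """Cohen's kappa with quadratic weights for two lists of integer grades."""
    n = len(rater_a)
    # Observed confusion matrix (counts)
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1
    # Marginal histograms define the chance-expected counts
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    num = den = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            num += weight * observed[i][j]
            den += weight * hist_a[i] * hist_b[j] / n
    # Degenerate case: both raters used one identical grade throughout
    return 1.0 - num / den if den else 1.0


def video_mes(proportions, thresholds):
    """Hypothetical rule: highest grade g whose share of frames predicted
    >= g reaches thresholds[g - 1]; otherwise Mayo 0."""
    score = 0
    for g in range(1, GRADES):
        if sum(proportions[g:]) >= thresholds[g - 1]:
            score = g
    return score


def grid_search(video_props, reviewer_mes, grid):
    """Try every threshold combination; keep the one with the best kappa."""
    best_kappa, best_thresholds = -2.0, None
    for thresholds in product(grid, repeat=GRADES - 1):
        preds = [video_mes(p, thresholds) for p in video_props]
        kappa = quadratic_weighted_kappa(reviewer_mes, preds)
        if kappa > best_kappa:
            best_kappa, best_thresholds = kappa, thresholds
    return best_kappa, best_thresholds


# Toy, made-up example (not study data): per-video fractions of
# informative frames predicted as Mayo 0, 1, 2, 3.
videos = [
    [0.90, 0.08, 0.02, 0.00],
    [0.40, 0.50, 0.10, 0.00],
    [0.20, 0.30, 0.45, 0.05],
    [0.05, 0.15, 0.40, 0.40],
]
reviewer = [0, 1, 2, 3]
kappa, thresholds = grid_search(videos, reviewer, [i / 20 for i in range(1, 11)])
print(kappa, thresholds)
```

In a real evaluation, the thresholds would be fit on the developmental videos and then frozen before scoring a separate test set, as the abstract describes for the clinical trial videos.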

Results

The developmental set included 51 high-resolution videos (Mayo 2 or 3: 41.2%), and the multicenter clinical trial set contained 264 videos (Mayo 2 or 3: 83.7%,

Ordinal characteristics of the automated process for predicting progressively increasing disease severity. TPR, true-positive rate; FPR, false-positive rate.
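Predicting progressively increasing severity can be read as a series of binary tasks (Mayo ≥1, ≥2, and ≥3), each with its own TPR/FPR curve. The Python sketch below assumes a hypothetical continuous per-video severity score (here, the expected Mayo grade computed from frame-level proportions) and computes ROC points and a trapezoidal AUC for each cutoff. The score definition, function names, and toy data are illustrative assumptions, not the study's method or results.

```python
def expected_grade(proportions):
    """Hypothetical continuous severity score: mean Mayo grade over frames."""
    return sum(grade * share for grade, share in enumerate(proportions))


def roc_points(scores, positives):
    """(FPR, TPR) pairs from sweeping a threshold down through the scores."""
    n_pos = sum(positives)
    n_neg = len(positives) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, positives) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, positives) if s >= t and not y)
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_auc(points):
    """Area under a piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def ordinal_aucs(reference_mes, scores, cutoffs=(1, 2, 3)):
    """AUC for each binary task 'reference MES >= cutoff'."""
    aucs = {}
    for cutoff in cutoffs:
        positives = [mes >= cutoff for mes in reference_mes]
        aucs[cutoff] = trapezoid_auc(roc_points(scores, positives))
    return aucs


# Toy, made-up example (not study data).
videos = [
    [0.90, 0.08, 0.02, 0.00],
    [0.40, 0.50, 0.10, 0.00],
    [0.20, 0.30, 0.45, 0.05],
    [0.05, 0.15, 0.40, 0.40],
]
reference = [0, 1, 2, 3]
aucs = ordinal_aucs(reference, [expected_grade(p) for p in videos])
print(aucs)
```

Each cutoff needs at least one positive and one negative video. Otherwise, the TPR or FPR denominator is zero.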

Conclusion

Although the tool is not yet ready for deployment, these early results support the feasibility of artificial intelligence approaching expert-level endoscopic disease grading in UC.